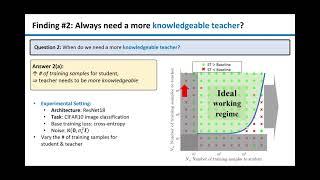

Self-Training with Noisy Student (87.4% ImageNet Top-1 Accuracy!)

Description

This video explains the new state of the art in ImageNet classification from Google AI: Noisy Student, which reaches 87.4% top-1 accuracy. The technique takes an unusual approach to knowledge distillation. Rather than using the student network for model compression (usually for faster inference or lower memory use), it iteratively scales up the capacity of the student network, with each trained student becoming the teacher for the next round. The video also covers other interesting aspects of the paper, such as injecting noise into the student through data augmentation, dropout, and stochastic depth, and balancing classes among the pseudo-labels.
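
The teacher/student loop described above can be sketched in a few dozen lines of pure Python. This is a minimal toy, not the paper's implementation: a nearest-centroid classifier on 2-D points stands in for EfficientNet, Gaussian jitter stands in for data augmentation, and inverted feature dropout stands in for dropout (stochastic depth has no analogue in a model this small and is omitted). Because a centroid model has fixed capacity, each student here is the same size as its teacher, whereas the paper makes each student equal to or larger than the previous teacher. The function names (`noisy_student`, `balance_pseudo_labels`, `train`, `predict`, `noisy`) and every hyperparameter, including the 0.3 confidence threshold and `per_class`, are hypothetical illustrative choices.

```python
import math
import random
from collections import defaultdict


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(centroids, x):
    # Soft prediction: nearer centroids get higher probability. No noise at inference.
    return softmax([-math.dist(x, c) for c in centroids])


def noisy(x, rng, sigma=0.3, drop=0.1):
    # Stand-in noise (assumption): Gaussian jitter plus inverted feature dropout.
    return [0.0 if rng.random() < drop else (v + rng.gauss(0, sigma)) / (1 - drop) for v in x]


def train(examples, num_classes, rng, add_noise):
    # Fit one centroid per class as a probability-weighted mean, so soft labels work.
    dim = len(examples[0][0])
    sums = [[0.0] * dim for _ in range(num_classes)]
    weights = [0.0] * num_classes
    for x, probs in examples:
        if add_noise:
            x = noisy(x, rng)
        for k, p in enumerate(probs):
            weights[k] += p
            for d in range(dim):
                sums[k][d] += p * x[d]
    return [[s / weights[k] for s in sums[k]] for k in range(num_classes)]


def balance_pseudo_labels(preds, per_class, threshold=0.3):
    # Drop low-confidence pseudo-labels, then give every predicted class exactly
    # `per_class` examples: most confident first, duplicating when a class is short.
    by_class = defaultdict(list)
    for x, probs in preds:
        conf = max(probs)
        if conf < threshold:
            continue
        by_class[probs.index(conf)].append((conf, x, probs))
    balanced = []
    for items in by_class.values():
        items.sort(key=lambda t: t[0], reverse=True)
        chosen = [items[i % len(items)] for i in range(per_class)]
        balanced.extend((x, probs) for _, x, probs in chosen)
    return balanced


def noisy_student(labeled, unlabeled, num_classes, iterations=3, per_class=50, seed=0):
    rng = random.Random(seed)
    one_hot = [(x, [1.0 if k == y else 0.0 for k in range(num_classes)]) for x, y in labeled]
    teacher = train(one_hot, num_classes, rng, add_noise=True)  # initial teacher: labeled data only
    for _ in range(iterations):
        preds = [(x, predict(teacher, x)) for x in unlabeled]  # un-noised teacher gives soft pseudo-labels
        pseudo = balance_pseudo_labels(preds, per_class)  # confidence filter + class balancing
        student = train(one_hot + pseudo, num_classes, rng, add_noise=True)  # noised student
        teacher = student  # the student becomes the next teacher
    return teacher


if __name__ == "__main__":
    data_rng = random.Random(1)
    centers = [(0.0, 0.0), (5.0, 5.0)]

    def sample(c):
        return [c[0] + data_rng.gauss(0, 1), c[1] + data_rng.gauss(0, 1)]

    labeled = [(sample(centers[y]), y) for y in (0, 1) for _ in range(5)]
    unlabeled = [sample(centers[i % 2]) for i in range(200)]
    model = noisy_student(labeled, unlabeled, num_classes=2)
    for c in centers:
        print(c, [round(p, 3) for p in predict(model, list(c))])
```

Running it prints the final student's class probabilities at each cluster center. Adapting the sketch to a real network would mean replacing `train` and `predict` (and the noise function) while keeping the shape of the loop: an un-noised teacher labels the unlabeled data, the pseudo-labels are filtered and balanced, and a noised student trains on labeled plus pseudo-labeled data.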

Thanks for watching! Please Subscribe!

Self-training with Noisy Student improves ImageNet classification: https://arxiv.org/pdf/1911.04252.pdf

EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks: https://arxiv.org/pdf/1905.11946.pdf

Billion-scale semi-supervised learning for state-of-the-art image and video classification: https://ai.facebook.com/blog/billion-scale-semi-supervised-learning/
